Bundle Experiments at Buffer: Part 1

Alfred Lua / Written on 20 May 2020

Hi there,

In case you missed last week's email, Weekly Notes is a new addition to your Yeti Distro subscription. These are my private notes on the things I'm working on at Buffer and on the side, available only to paying subscribers. You will receive them every Wednesday morning (US time).

Last week, I shared the job description I wrote for my product marketing role at Buffer.

Here is this week's note:

From one product to three products to...

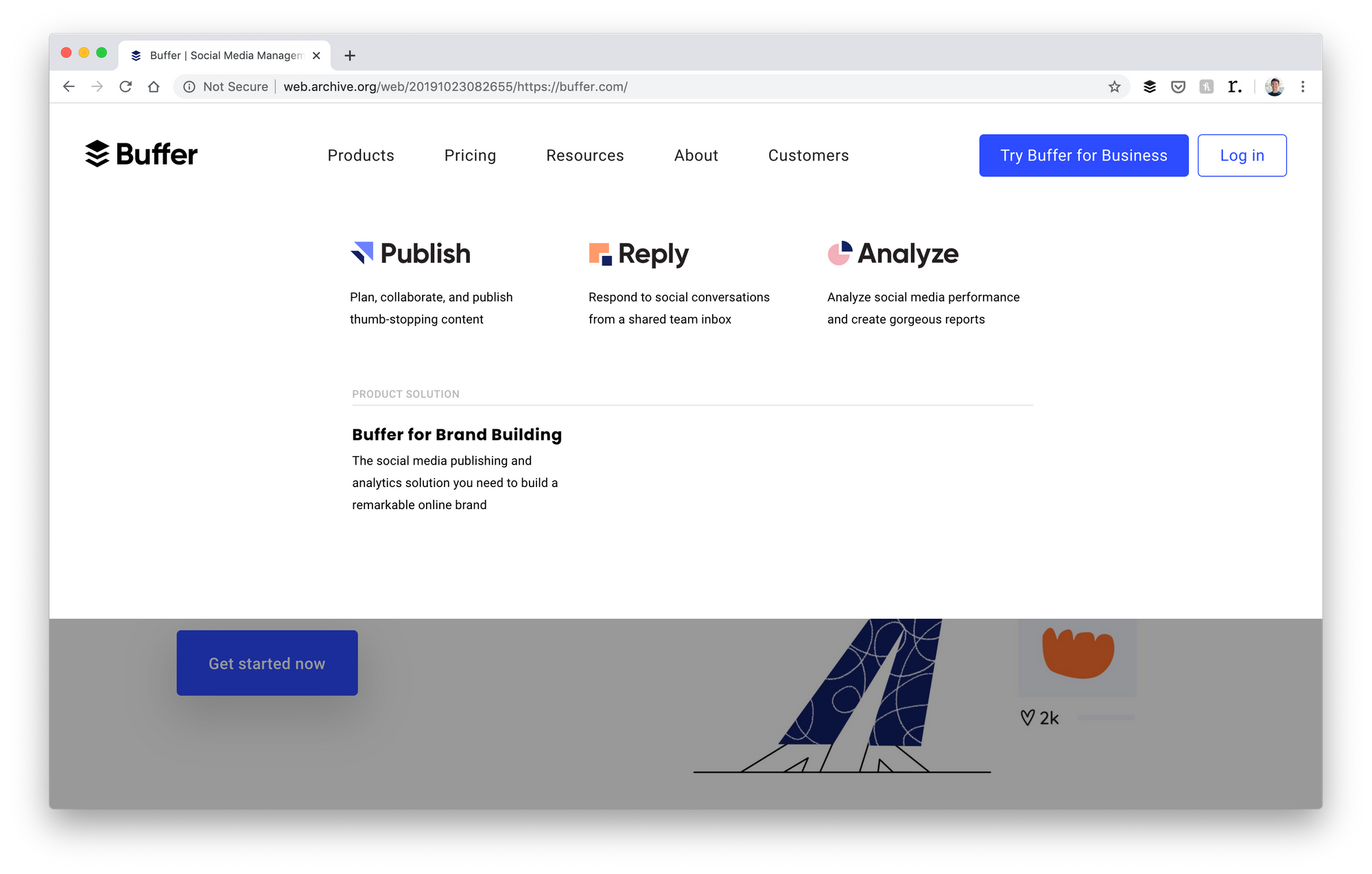

For about eight years, Buffer only had one product: the social media management tool, Buffer. In 2016, we acquired Respondly and built it into Reply, a social media customer support tool. We also took analytics out of our flagship product and developed it into a standalone social media analytics tool, Analyze. Our flagship product was rebranded as Publish.

We now have three products that help customers solve three "jobs":

- Publishing their social media content

- Analyzing their social media performance

- Replying to comments on their social media posts

The idea of unbundling social media management into three distinct areas is to give our customers the freedom to mix and match the tools they need rather than paying a huge amount for an all-in-one solution that they might not fully use.

This sounds great, but we struggled to execute it well. While we wanted customers to "mix and match", there was no easy way to do that. From our pricing page, visitors could start a trial for only one product at a time. After being directed into their Buffer account, they had to go back to our pricing page or find the other product(s) in their Buffer account to start another trial.

Here's how I described the situation to the team:

Our current experience is a bit like queueing to get a burger and then queueing again to get fries and a drink at McDonald's. 😅

As a product marketer, I felt uncomfortable about making our customers jump through so many hoops. We were asking people to try multiple Buffer products but they couldn't start trials for multiple products at the same time.

So I was eager to make that possible. Bundles came to mind.

There were two other things that motivated me.

- As a marketer myself, I know it makes sense to use Publish and Analyze together [1]. Marketers want to know how their social media content is performing. I believed (and still believe) our customers would find Buffer more valuable if they tried Publish and Analyze together and would be more likely to subscribe and stay (i.e. higher conversion rate and lifetime value) [2].

- As Analyze's product marketer, I wanted, perhaps selfishly, to increase the number of Analyze trials started. Because our homepage only featured Publish, few visitors found out about Analyze. By packaging Analyze with Publish, we could get a lot more people to discover and try Analyze.

Challenges, challenges, challenges

But it wasn't easy to convince the team.

First, while this could increase the number of trials started for Analyze, it could also decrease the number of trials started for Publish because the bundle is more expensive than Publish alone. Publish accounted for more than 90 percent of our revenue, and we were nervous about doing anything that would affect Publish signups. This was also why, for a long time, our homepage only featured Publish. But, as mentioned above, I was pretty convinced customers would be better off trying Publish and Analyze together, so I thought the tradeoff would be worth it.

Second, because Publish and Analyze were built separately, the experience of using them together was (and still is) disjointed. For example, customers have to connect their social media accounts separately for Publish and Analyze. A lot of engineering work would be required to create a smooth experience. And the product experience matters when testing whether customers want the bundle (i.e. subscribe to both products): people might sign up for the bundle because they wanted it but then not subscribe because using the two products together felt subpar. But should we invest months in a streamlined product experience without knowing whether people actually wanted the bundle? The team's hesitation was understandable because there would be so much to do. For example, we would need multiple signup flows (one for those who sign up for Publish only and one for those who sign up for the bundle), different in-app messages, different onboarding emails, etc.

I was personally convinced bundling was the way to go, but I couldn't make a convincing case for it.

This is one of the biggest career lessons for me: It's not enough to have the right idea or an idea that turns out to be right. I need to inspire others to think it is the right idea and want to work on it.

With the help of a few teammates, I eventually convinced the team to run a short two-week experiment on a less obvious part of our marketing site. The goal was just to test the positioning of the bundle and gather any indication of interest before experimenting on more prominent parts of our marketing site.

At that point, we didn't even have an A/B testing framework and could only do a monitored rollout. A monitored rollout means implementing the changes for a period of time (two weeks for us), reverting them, and then comparing the metrics with those from the prior two weeks (the "control group"). This is usually less accurate than an A/B test because something could happen during the experiment period that affects the experiment group but not the control group. Even though there are data analysis methods to reduce the "noise" in the results, A/B tests are generally preferred because both groups run at the same time, so anything that happens during the experiment period affects the control and experiment groups equally.
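For the technically curious, here is a minimal sketch of what makes an A/B test different: visitors are split into groups at the same time rather than across two different time windows. It assumes each visitor has a stable ID and hashes it so a returning visitor always lands in the same group. This is a generic bucketing technique, not how A/Bert actually works; `assign_group` and the experiment name are hypothetical.

```python
import hashlib


def assign_group(visitor_id: str, experiment: str = "bundle-test", split: float = 0.5) -> str:
    """Deterministically bucket a visitor into 'control' or 'experiment'.

    Hypothetical helper for illustration; not A/Bert's actual API.
    """
    # Hash experiment name + visitor ID so the same visitor always gets the
    # same group, and different experiments get independent splits.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) onto the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "experiment" if bucket < split else "control"
```

Running `assign_group` over a few thousand visitor IDs gives roughly a 50/50 split. Because both groups are drawn from the same two weeks, an outside event (a holiday, an outage, a competitor's launch) hits both of them instead of skewing one.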

Fortunately, we managed to build an A/B testing framework, A/Bert, the day before the designated date for the experiment. (Shoutout to my teammates, Gisete Kindahl, Federico Weber, and Matt Allen, for pulling it off.)

Shaping the experiment

Eventually, we decided on a "small" experiment that still required a bit of work [3].

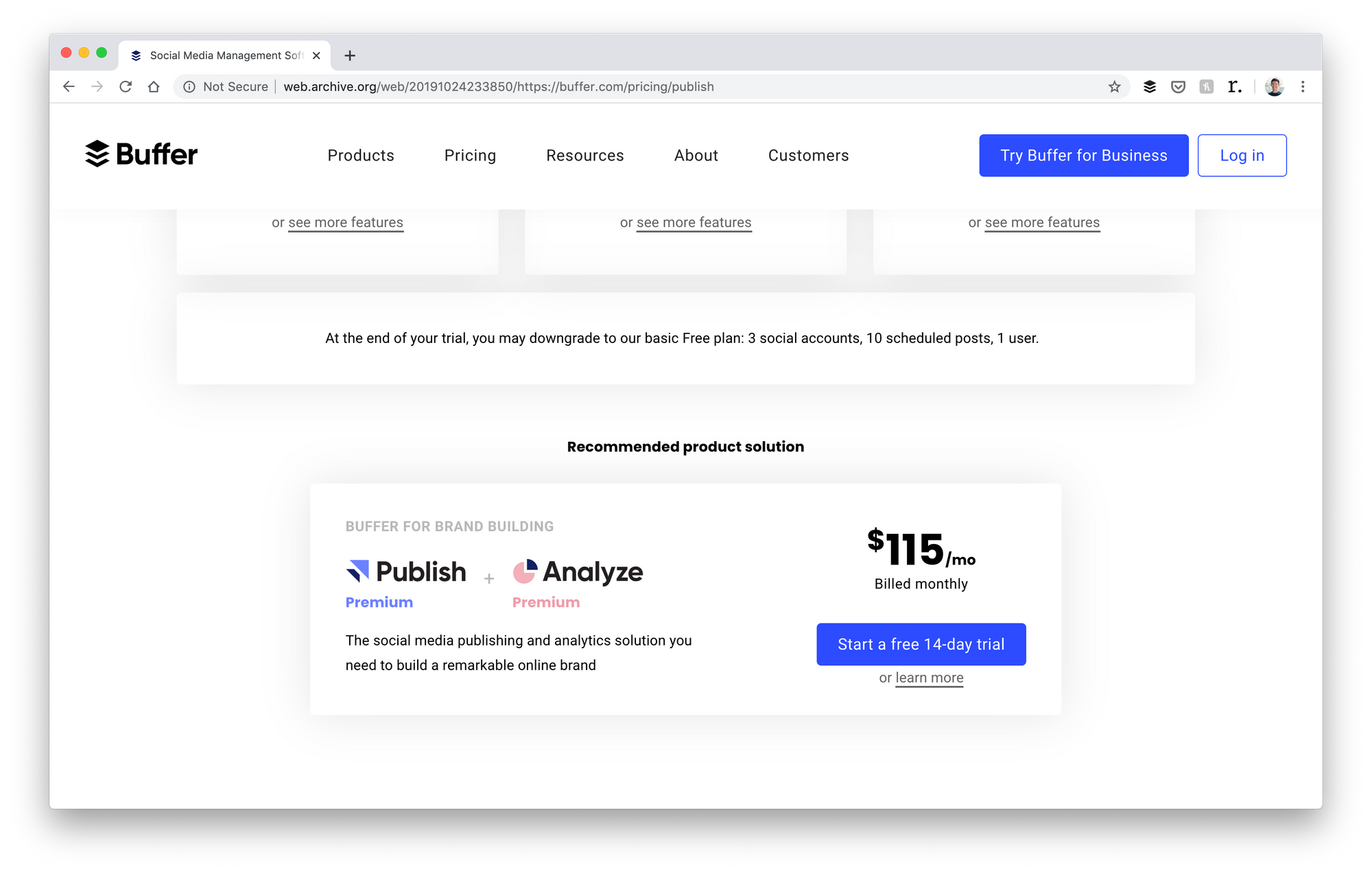

First, we'd show visitors the bundle in the "Products" dropdown menu in the top navigation of our homepage and below the pricing cards on our pricing page.

When visitors clicked on the bundle, they were directed to the bundle page, where they could start a Publish + Analyze trial with just one click. (You can browse the website via the Internet Archive.)

From that point on, the experience remained the same. Visitors would be onboarded to Publish, and only when they clicked on Analyze in their Buffer account would they be asked to set up their social accounts in Analyze. This was so that we didn't have to make major changes to the product before knowing whether people wanted the bundle. I personally would have preferred improving the experience, but I understood we needed at least some data to feel comfortable investing more effort (at the scale of months) into that. It was a compromise I accepted to move the project forward.

To make sure we ran a proper experiment, I worked with Matt, our data scientist, to design it. The entire experiment hinged on the hypothesis, which describes the experiment in specific detail and ultimately determines whether it is a success.

Here was the hypothesis for the experiment:

If we offer an easier, more convenient way for new-to-Buffer users to create a Buffer account and trial Publish & Analyze all at the same time (i.e., create a Buffer account, sign up for Analyze, sign up for Publish, start an Analyze trial, and start a Publish trial all in one workflow)

Then we will see an increase in the number of users paying for both products

Because we believe our customers want a publishing and analytics tool — and even though people can technically start a trial for both Publish and Analyze on their own, it seems there’s too much friction preventing them from doing so.

We ran the experiment for two weeks, then waited 28 days before we analyzed the results. This is because our data team found that the majority of Buffer customers subscribed within 28 days of starting a trial. If they didn't convert by then, it's unlikely they would afterward.
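The 28-day rule works as an attribution window. Here is a minimal sketch of how it could be applied, assuming each trial is recorded as a start date plus an optional subscription date. The helper names are hypothetical, and this is not Buffer's actual data pipeline.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

# The conversion window described above: most customers subscribe within
# 28 days of starting a trial.
CONVERSION_WINDOW = timedelta(days=28)


def converted_within_window(trial_start: date, subscribed_on: Optional[date],
                            window: timedelta = CONVERSION_WINDOW) -> bool:
    """True if the trial turned into a paid subscription within the window."""
    if subscribed_on is None:
        return False
    return trial_start <= subscribed_on <= trial_start + window


def earliest_analysis_date(experiment_end: date,
                           window: timedelta = CONVERSION_WINDOW) -> date:
    """A trial can start on the experiment's last day, so wait a full window."""
    return experiment_end + window


def conversion_rate(trials: List[Tuple[date, Optional[date]]]) -> float:
    """Share of (trial_start, subscribed_on) pairs that converted in time."""
    if not trials:
        return 0.0
    converted = sum(converted_within_window(start, paid) for start, paid in trials)
    return converted / len(trials)
```

Waiting the full window after the experiment ends means that visitors who started a trial on the last day get the same 28 days to convert as those who started on the first day.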

A failed experiment but...

Here was the summary of the results I shared with the team:

Good news: More Analyze trials, more Analyze subscriptions, higher MRR per account. People who saw the self-serve solution ended up with a higher MRR per account. This is because they started more Analyze trials than those who didn't see the solution, and the trial conversion rates for Analyze were the same for both groups, which meant more Analyze subscriptions.

The cause might be increased awareness of Analyze via the solution offering, not the convenience of the solution offering: The number of users who ended up subscribing to both products is about the same whether they saw the self-serve solution or not. Of those who saw the solution and ended up subscribing to both products, only 3 users started a solution trial; the rest (~30) started their Publish and Analyze trials separately (e.g. via AppShell).

Positive results, more testing required: While this feels like a net positive signal, we still need to figure out the best way to offer our solutions. Matt recommends we test further by (1) driving more traffic to the experiment landing page, (2) refining the messaging on the experiment landing page, and (3) changing the solutions offered.

Caveat: To reduce the scope of the experiment, we didn't have shared social account connection, centralized billing, or combined in-app onboarding, which could have impacted the solution trial conversion. The Core team has made nice progress on the connection and billing fronts!

You can read the full analysis by Matt here.

While we saw positive results, it was a failed experiment because it did not meet the success metric (more users paying for Publish and Analyze). But the good news was that this gave me some data to push the project further and, I think, made people more open to the bundle concept.

I really wanted bundles to work, so I wanted to test with a bigger group and get more results. And by a weird stroke of luck, a series of events made it possible for me to run a bigger experiment. We started to see higher churn from Publish customers and lower churn from customers who subscribed to both Publish and Analyze. The Publish product manager left, and the rest of the team were more open to experiments that might reduce the number of Publish trial starts.

“Luck is what happens when preparation meets opportunity.” ― Seneca

I started working on a new experiment: Product Solutions V2.

More on that next week!

P.S. I would be remiss if I didn't acknowledge everyone who was involved in the "small" experiment: Julia Jaskólska (design), Gisete Kindahl (marketing site), Tigran Hakobyan, Mike San Roman, Federico Weber, Phil Miguet (engineering), Matt Allen (data), Tom Redman (product), and Hannah Voice (advocacy). Thank you!

[1]: Reply didn't fit in because it is mainly used by customer support teams while Publish and Analyze are used by marketers.

[2]: In hindsight, this turned out to be quite accurate. We discovered that customers who subscribe to Publish and Analyze have a lifetime value four times higher than customers who only subscribe to Publish. I should state I was neither the first nor the only person who thought of this.

[3]: Many software projects require a lot more work than they seem to on the surface. This was no different. We had to figure out how to track and measure the results and think through a lot of edge cases. What if someone who is subscribed to Publish tries to start a bundle trial? Are they considered a bundle user? If someone has trialed Publish or Analyze before but didn't convert, can they still start a bundle trial? What if they are already subscribed to a higher Publish plan but not Analyze?